Researchers say they’ve figured out how to predict personality traits and career success from a selfie. They fed MBA graduates’ headshots into an AI system that analyzed the faces and predicted each person’s Big Five personality traits, then tracked how those graduates’ careers actually played out. Turns out, the AI wasn’t half bad at predicting who would succeed.

One of my favs, Malcolm Gladwell, wrote about a close cousin of this idea in “Talking to Strangers.” He describes a study in which an AI algorithm was given data from roughly half a million bail cases (just age and criminal record, nothing fancy) and then compared against actual judges, who got to meet the defendants face to face, look them in the eye, and read their body language. The judges were trying to determine who was “high risk,” meaning likely to either reoffend or skip bail.

Guess what? The algorithm won, and it wasn’t even close. The defendants the judges released on bail were 25% more likely to commit another crime than the ones the computer would have released. So all that looking people in the eye and trusting your gut? It actually made the judges worse at their jobs, not better.

So here’s the uncomfortable question for loss prevention (LP) pros: if AI can predict career success from a photo, or whether someone will reoffend, how long before it claims to predict theft?

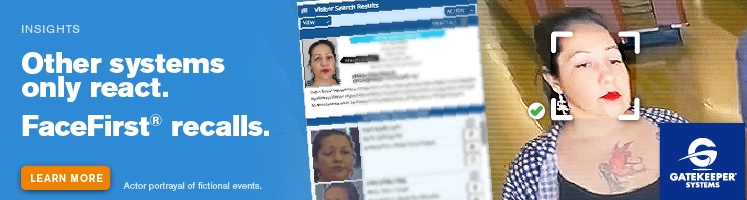

Actually, we’re already partway there. Behavioral AI systems are being deployed in retail right now, analyzing everything from gait and hand movements to facial expressions in an effort to flag “suspicious behavior.” One vendor claimed its system reduced shoplifting losses by 77% during testing, though I’d love to see the methodology behind that number before we pop the champagne.

But here’s where it gets really interesting. These current systems analyze behavior in real time. What happens when someone figures out how to skip that step entirely? When AI claims it can look at someone’s face… not their actions, just their face… and determine theft risk before they even walk through the door?

We’re about to enter an era where your face becomes evidence. Not evidence of what you did, but evidence of what an algorithm thinks you might do. (And they said it wouldn’t be like Minority Report. Yeah, OK.)

The technology is advancing faster than our ability to have honest conversations about it. Faster than regulations can keep up. Faster than most LP professionals realize what’s coming down the pipeline. I’d love to say THAT part isn’t coming, but to be honest, what it can already do is blowing my mind.

Questions to consider if we are headed down this path: Are we ready for the lawsuits? The discrimination claims? The privacy concerns? Will AI systems truly be unbiased? Are we prepared to defend using technology that makes judgments about people before they’ve done anything wrong?

I’m just saying, some version of these implications is coming whether we like it or not. Maybe it’s worth thinking about what your vendors are actually selling you and how it works. Maybe it’s worth asking some questions about accuracy and bias, and about who ends up holding the bag when things go sideways. AI vendors are certainly coming out of the woodwork, so pick one you can trust.

Your face is already telling a story. But is it one you want AI to know?